By Taha Yasseri, University College Dublin [This article first appeared in The Conversation, republished with permission]

The human brain is a marvellous machine, capable of handling complex information. To help us make sense of information quickly and make rapid decisions, it has learned to use shortcuts, called “heuristics”. Most of the time, these shortcuts help us to make good decisions. But sometimes they lead to cognitive biases.

Answer this question as quickly as you can without reading on: which European country was hit the hardest by the pandemic?

If you answered “Italy”, you’re wrong. But you’re not alone. Italy is not even in the top ten European countries by the number of confirmed COVID cases or deaths.

It is easy to understand why people might give a wrong answer to this question – as happened when I played this game with friends. Italy was the first European country to be hit by the pandemic, or at least this is what we were told at the beginning. And our perception of the situation formed early on with a focus on Italy. Later, of course, other countries were hit worse than Italy, but Italy is the name that got stuck in our heads.

The trick of this game is to ask people to answer quickly. When I gave friends time to think or look for evidence, they often came up with a different answer – some of them quite accurate. Cognitive biases are shortcuts and shortcuts are often used when there are limited resources – in this case, the resource is time.

This particular bias is called “anchoring bias”. It occurs when we rely too heavily on the first piece of information we receive about a topic and fail to update our perception when we receive new information.

As we show in recent work, anchoring bias can take more complex forms, but one feature of our brain is essential to all of them: it is easier to stick to the information we stored first and to work out our decisions and perceptions starting from that reference point – often without moving very far from it.

Data deluge

The COVID pandemic is remarkable for many things, but, as a data scientist, the one that stands out for me is the amount of data, facts, stats and figures that are available to pore over.

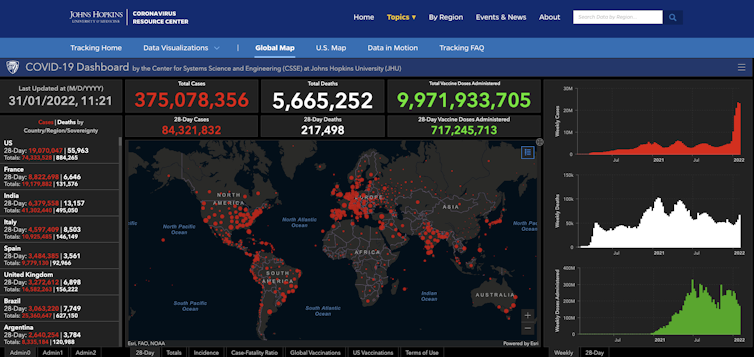

It was rather exciting to be able to regularly check the numbers online on portals such as Johns Hopkins Coronavirus Resource Center and Our World in Data, or just tune in to almost any radio or TV station or news website to see the latest COVID statistics. Many TV channels introduced programme segments specifically to report those numbers daily.

Johns Hopkins data portal

However, the firehose of COVID data that came at us is not compatible with the rate at which we can meaningfully use and handle that data. Our brain takes in the anchors, the first wave of numbers or other information, and sticks to them.

Later, when it is challenged by new numbers, it takes some time to switch to the new anchor and update. This eventually leads to data fatigue, when we stop paying attention to any new input and we forget the initial information, too. After all, what was the safe length for social distancing in the UK: one or two metres? Oh no, 1.5 metres, or 6 feet. But six feet is 1.8 metres, no? Never mind.

The issues with COVID communication are not limited to the statistics describing the spread and prevalence of the pandemic or the safe distance we should keep from others. Initially, we were told that “herd immunity” appears once 60%-70% of the population has gained immunity either through infection or vaccination.

Later, with more studies and analysis, this number was more accurately predicted to be around 90%-95%, which is meaningfully larger than the initial number. However, as shown in our study, the role of that initial number can be profound, and a simple update wasn’t enough to remove it from people’s minds. This could to some extent explain the vaccine hesitancy that has been observed in many countries; after all, if enough other people are vaccinated, why should we be bothered to risk the vaccine’s side-effects? Never mind that the “enough” might not be enough.
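The pull of that initial number can be pictured with a toy calculation. The sketch below is an illustration only, not the model from the study mentioned above: it assumes a perceived value that starts at the anchor and moves only part of the way towards the updated figure. The function name `anchored_estimate` and the `adjustment` weight are hypothetical, and the two thresholds are simply the midpoints of the ranges quoted above.

```python
def anchored_estimate(anchor: float, new_value: float, adjustment: float) -> float:
    """Start at `anchor` and move only part of the way towards `new_value`.

    `adjustment` is a hypothetical weight between 0 and 1:
    0 means the anchor is never updated, 1 means a full update.
    """
    if not 0.0 <= adjustment <= 1.0:
        raise ValueError("adjustment must be between 0 and 1")
    return anchor + adjustment * (new_value - anchor)


# Midpoints of the two herd-immunity ranges quoted in the text.
initial_threshold = 65.0  # midpoint of 60%-70%
updated_threshold = 92.5  # midpoint of 90%-95%

# Illustrative adjustment weights only; they are not measured values.
for adjustment in (0.0, 0.5, 1.0):
    estimate = anchored_estimate(initial_threshold, updated_threshold, adjustment)
    print(f"adjustment={adjustment:.1f} -> perceived threshold {estimate:.2f}%")
```

With a weight of 0.5, the perceived threshold lands halfway between the two figures, well short of the updated one. Under this assumption, someone who only partly updates would still believe that far fewer vaccinated people are "enough".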

The point here is not that we should stop the flow of information or ignore statistics and numbers. Instead, when we deal with information, we should learn to take our cognitive limitations into account. If we were going through the pandemic all over again, I would be more careful about how much data I exposed myself to, in order to avoid data fatigue. And when it comes to decisions, I would take my time rather than force my brain into shortcuts – I would check the latest data rather than relying on what I thought I knew. This way, my risk of cognitive bias would be minimised.

Taha Yasseri, Associate Professor, School of Sociology; Geary Fellow, Geary Institute for Public Policy, University College Dublin

This article is republished from The Conversation under a Creative Commons license. Read the original article.